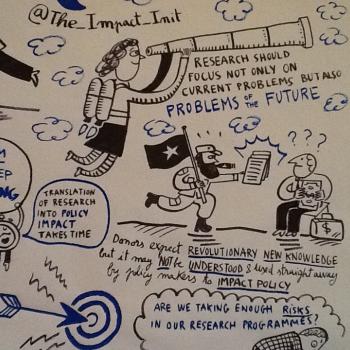

How social science research contributes to solving real world problems has always been a concern for researchers. Few people study social ills like poverty without wishing to contribute to policies and programmes which help those affected. So it's great that the impact of research is receiving more attention than ever before. I'm just back from a conference, organised by IDS and the Impact Initiative, set up to learn lessons from 10 years of research on poverty funded by DFID-ESRC. A lot was presented and discussed at the conference (the story is online and is pretty quick to absorb, being set out in the medium of Twitter).

There is a lot that matters here. It is to the credit of DFID and others that they have been strong supporters of building a better evidence base for public policy. These arguments are well rehearsed - look, for example, at the LSE's brilliant Impact of the Social Sciences blog. Public policy without good evidence is an expensive shot in the dark, but experience shows that good research does not automatically lead to change. To think it would ignores all sorts of issues of politics and pragmatism (e.g. ideology, policy interest cycles, competing agendas, timing, financing, feasibility, capacity and luck). And there is a further challenge of research attribution: research sits alongside all sorts of other inputs to the policy process, and to ignore these is naive and underplays the importance of national policy making processes.

There is, of course, a 'big' question of what research impact actually is. The ESRC are helpful on this; they see research impact as:

- Conceptual - changing ideas which then can lead to better understandings and new solutions

- Instrumental - that policy or programming change happens as a direct consequence of research

- Capacity building - building new skills or capacities

The Young Lives Theory of Change emphasizes the different channels (research, uptake, innovation and capacity building) through which we see impact happening. All of those types of impact matter, but while all research probably contributes to capacity building by definition, researchers often think more about conceptual impact, and funders think more about tangible instrumental impact. In thinking about how researchers have impact (or make a contribution), I have three takeaway points (my slides from one of the conference sessions are on SlideShare).

First, identifying policy demand and thinking politically. One of the first keynote speakers argued that researchers need to identify the policy demand for research and, while not excluding the importance of conceptual contributions, argued that "curiosity driven research is unlikely to deliver focused development impact". Young Lives recognises the importance of thinking politically to understand, and to meet, the policy demand for evidence. This particularly encourages us to work in alliances with other national or international organisations, since these organisations may be closer to policy debates and so can help ensure our research makes an effective contribution. Equally, there needs to be space for other approaches. To be only instrumental to an existing policy debate precludes breaking out of existing frames. As an example, the so-called Heckman curve is fundamental in motivating a growing wave of investments in preschool interventions. The (huge) benefit of this analysis is precisely that it opens minds to new options, not only that it services existing agendas.

Second, valuing 'pathways to impact'. It is tempting to judge research by direct impact, but that isn't necessarily the best approach. The ESRC use the useful language of pathways to impact. Even the best research may not pay off in a straightforward and timely way, for all the reasons noted above. It is better to ensure there is a sound theory of change that is likely to help research reach the right audiences in a timely way. Valuing achieved impact over these types of pathways risks valuing luck as much as effort. Incidentally, for cohort studies this may be a particular issue - a couple of weeks ago an excellent book (The Life Project) was published discussing the enormous contribution made by the 1946 British Cohort Study. It is unlikely that waiting 70 years to evaluate impact would appeal to funders.

Third, identifying conceptual impacts. There was much discussion at the conference of the balance between conceptual and instrumental impacts. I've already argued that both matter. And while it is tempting to say that the role of research is to develop new ideas and new arguments, it is clearly not enough to say this without then providing some sense of how that impact can be judged - research like Young Lives is often funded by public money, and so we have an accountability to show the difference made. One of the most interesting takeaways for me was in thinking about how that type of vital, but intangible and long term, impact can be assessed. One argument put forward was to find better ways of assessing how the language used in debates shifts following research. Right on cue, on my return I see this from IDS, piggybacking on Twitter to provide a tool to see how discourse is shifting. To do that properly would clearly be a serious challenge, but we need better tools and approaches to demonstrate conceptual impacts.

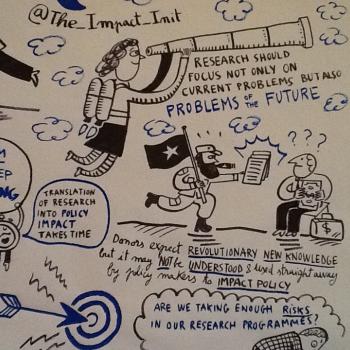
